
A Deep Emulator for Secondary Motion of 3D Characters

Abstract: Fast and lightweight methods for animating 3D characters are desirable in various applications, such as computer games. We present a learning-based approach to enhance skinning-based animations of 3D characters with vivid secondary motion effects. We design a neural network that encodes each local patch of a character simulation mesh, where the edges implicitly encode the internal forces between neighboring vertices. The network emulates the ordinary differential equations of the character dynamics, predicting new vertex positions from the current accelerations, velocities and positions. Being a local method, our network is independent of the mesh topology and generalizes to arbitrarily shaped 3D character meshes at test time. We further represent per-vertex constraints and material properties such as stiffness, enabling us to easily adjust the dynamics in different parts of the mesh. We evaluate our method on various character meshes and complex motion sequences. Our method can be over 30 times more efficient than ground-truth physically based simulation, and it outperforms alternative solutions that provide fast approximations.
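To make the idea of a "local patch" more concrete, here is a minimal sketch of how per-vertex patches might be gathered from the edges of a tetrahedral simulation mesh. The feature layout (each neighbor's position, velocity and acceleration relative to the center vertex, plus the center's stiffness and constraint flag) and the names `one_ring` and `build_patches` are hypothetical illustrations, not the exact encoding used by the network.

```python
from collections import defaultdict


def one_ring(tets):
    """Map each vertex to the vertices it shares a tetrahedron edge with.
    Every pair of vertices in a tetrahedron is joined by an edge."""
    nbrs = defaultdict(set)
    for tet in tets:
        for a in tet:
            for b in tet:
                if a != b:
                    nbrs[a].add(b)
    return nbrs


def rel(u, v):
    """Component-wise difference u - v of two 3D tuples."""
    return tuple(x - y for x, y in zip(u, v))


def build_patches(pos, vel, acc, stiffness, constrained, tets):
    """Hypothetical per-vertex input: for each edge (i, j), the neighbor's
    position, velocity and acceleration relative to vertex i, plus vertex i's
    own stiffness and constraint flag. Relative quantities make the patch
    invariant to a global translation of the mesh."""
    patches = {}
    for i, nbrs in sorted(one_ring(tets).items()):
        edges = [
            (j, rel(pos[j], pos[i]), rel(vel[j], vel[i]), rel(acc[j], acc[i]))
            for j in sorted(nbrs)
        ]
        patches[i] = {
            "edges": edges,
            "stiffness": stiffness[i],
            "constrained": constrained[i],
        }
    return patches
```

Because each patch depends only on a vertex's one-ring, the same per-patch model can be applied to meshes of any size or connectivity. The number of neighbors still varies from vertex to vertex, so a real model would need to pad or aggregate the edge list; that detail is left out of this sketch.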

1. Training data examples

We construct our training data by assigning randomized motions to a primitive, e.g., a sphere. All results in the later sections are predicted by the network trained on this dataset. Here we provide three examples of random motion from the sphere dataset. The color coding visualizes the dynamics.
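The page does not say how the random motions are sampled, so the following is only one plausible sketch: draw random keyframes (a translation and a rotation angle about a random axis), interpolate linearly between them, and apply the resulting rigid transform to the primitive's rest-pose vertices with Rodrigues' rotation formula. The keyframe spacing, the ranges, and the names `random_trajectory` and `transform_vertex` are all assumptions. Such a rigid motion could act as the driving motion that a physically based simulator then enriches with secondary dynamics.

```python
import math
import random


def random_trajectory(num_frames, frames_per_key=30, max_shift=1.0,
                      max_angle=math.pi, seed=0):
    """Hypothetical sampler: returns a random unit rotation axis and, per frame,
    a (translation, angle) pair linearly interpolated between random keyframes."""
    rng = random.Random(seed)
    num_keys = num_frames // frames_per_key + 2
    keys = [
        (tuple(rng.uniform(-max_shift, max_shift) for _ in range(3)),
         rng.uniform(-max_angle, max_angle))
        for _ in range(num_keys)
    ]
    # Random unit rotation axis shared by the whole sequence.
    axis = [rng.gauss(0.0, 1.0) for _ in range(3)]
    norm = math.sqrt(sum(c * c for c in axis)) or 1.0
    axis = tuple(c / norm for c in axis)

    frames = []
    for f in range(num_frames):
        k, s = divmod(f, frames_per_key)
        t = s / frames_per_key
        (p0, a0), (p1, a1) = keys[k], keys[k + 1]
        shift = tuple((1 - t) * u + t * w for u, w in zip(p0, p1))
        frames.append((shift, (1 - t) * a0 + t * a1))
    return axis, frames


def transform_vertex(point, axis, shift, angle):
    """Rotate a rest-pose vertex about the unit `axis` by `angle`
    (Rodrigues' formula), then translate it by `shift`."""
    c, s = math.cos(angle), math.sin(angle)
    n = axis
    cross = (n[1] * point[2] - n[2] * point[1],
             n[2] * point[0] - n[0] * point[2],
             n[0] * point[1] - n[1] * point[0])
    dot = sum(a * b for a, b in zip(n, point))
    return tuple(point[i] * c + cross[i] * s + n[i] * dot * (1 - c) + shift[i]
                 for i in range(3))
```

For example, `axis, frames = random_trajectory(456)` produces one transform per frame, and `transform_vertex(v, axis, *frames[f])` moves rest-pose vertex `v` to its position at frame `f`.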

Sphere (vtx#: 1015; motion: random; frames: 456):

2. Our Secondary Motion inference pipeline

Below is the basic pipeline of our method. Given the skinned animation mesh, we first build a uniform volumetric mesh and then set the constraints. At inference time, our network predicts the secondary motion with respect to the input skinned volumetric mesh. Finally, we render the surface mesh, which is interpolated from the predicted volumetric mesh.
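The final step, interpolating the surface mesh from the volumetric mesh, is commonly done by embedding each surface vertex in a tetrahedron with fixed barycentric weights computed once in the rest pose and reused every frame. The sketch below assumes that linear embedding (the page only states that the surface is interpolated) and assumes the enclosing, non-degenerate tetrahedron of each surface vertex is already known; `embed` and `interpolate` are hypothetical names.

```python
def sub(u, v):
    return tuple(x - y for x, y in zip(u, v))


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def det3(u, v, w):
    """Determinant of the 3x3 matrix with columns u, v, w (scalar triple product)."""
    return dot(u, cross(v, w))


def embed(p, tet):
    """Barycentric weights of point p in rest-pose tetrahedron (a, b, c, d),
    solved with Cramer's rule. Assumes the tetrahedron is non-degenerate."""
    a, b, c, d = tet
    e1, e2, e3, r = sub(b, a), sub(c, a), sub(d, a), sub(p, a)
    vol = det3(e1, e2, e3)
    w1 = det3(r, e2, e3) / vol
    w2 = det3(e1, r, e3) / vol
    w3 = det3(e1, e2, r) / vol
    return (1.0 - w1 - w2 - w3, w1, w2, w3)


def interpolate(weights, deformed_tet):
    """Surface vertex position from the predicted (deformed) tetrahedron."""
    return tuple(sum(w * q[i] for w, q in zip(weights, deformed_tet))
                 for i in range(3))
```

Since the weights are computed only once, the per-frame cost of this step is a handful of multiply-adds per surface vertex.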

3. Our results

Big vegas (Figure 1) (vtx#: 1468):

Running time: 0.012 s/frame on GPU; 0.017 s/frame on CPU.

Big vegas (vtx#: 39684):

Running time: 0.14 s/frame on GPU; 0.89 s/frame on CPU.
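The two Big vegas timings above can be compared directly. The short script below only re-derives quantities from those reported numbers (frames per second, and how the per-frame time scales with the vertex count); it adds no new measurements.

```python
# Per-frame running times reported above for the two Big vegas meshes (seconds/frame).
small = {"vertices": 1468, "GPU": 0.012, "CPU": 0.017}
large = {"vertices": 39684, "GPU": 0.14, "CPU": 0.89}


def fps(seconds_per_frame):
    """Frames per second for a given per-frame running time."""
    return 1.0 / seconds_per_frame


vertex_ratio = large["vertices"] / small["vertices"]
for device in ("GPU", "CPU"):
    time_ratio = large[device] / small[device]
    print(f"{device}: {fps(small[device]):.1f} fps -> {fps(large[device]):.2f} fps; "
          f"time x{time_ratio:.2f} for x{vertex_ratio:.2f} vertices")
```

With about 27 times more vertices, the GPU time grows by roughly 11.7 times and the CPU time by roughly 52 times. On these two data points, the GPU time therefore grows more slowly than the vertex count, while the CPU time grows faster.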

3.1 Homogeneous dynamics

Ortiz (vtx#: 1258)

Motion: cross jumps rotation; frames: 122:

Motion: jazz dancing; frames: 326:

Kaya (vtx#: 1417)

Motion: zombie scream; frames: 167:

Motion: dancing running man; frames: 240:

3.2 Non-homogeneous dynamics

As described in Figure 6, we assign three different material settings to the character. In the legend at the bottom of the video, the gray area is constrained, the red area has lower stiffness, and the pink area has higher stiffness.
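The three regions in the legend above map naturally onto per-vertex properties. The sketch below shows one way region labels could be expanded into per-vertex constraint flags and stiffness values, and how constrained vertices could simply follow the skinned animation. The stiffness numbers are placeholders (only "red is softer than pink" comes from the page), and `Material`, `per_vertex_properties` and `enforce_constraints` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    constrained: bool
    stiffness: float  # placeholder values; unused for constrained vertices


# Hypothetical table mirroring the legend: gray = constrained,
# red = lower stiffness, pink = higher stiffness.
MATERIALS = {
    "gray": Material(constrained=True, stiffness=0.0),
    "red": Material(constrained=False, stiffness=0.5),
    "pink": Material(constrained=False, stiffness=2.0),
}


def per_vertex_properties(region_of_vertex):
    """Expand per-vertex region labels into constraint flags and stiffness values."""
    flags = [MATERIALS[r].constrained for r in region_of_vertex]
    stiffness = [MATERIALS[r].stiffness for r in region_of_vertex]
    return flags, stiffness


def enforce_constraints(predicted, skinned, flags):
    """Constrained vertices take their skinned positions;
    all other vertices keep the predicted dynamic positions."""
    return [s if c else p for p, s, c in zip(predicted, skinned, flags)]
```

Keeping the properties per vertex means a different body part can be made softer or stiffer by relabeling its vertices, without changing anything else.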

Michelle (Figure 6) (vtx#: 1105; motion: cross jumps; frames: 122):

Michelle (vtx#: 1105; motion: gangnam style; frames: 371):

Big vegas (vtx#: 1468; motion: cross jumps rotation; frames: 122):

3.3 Performance analysis

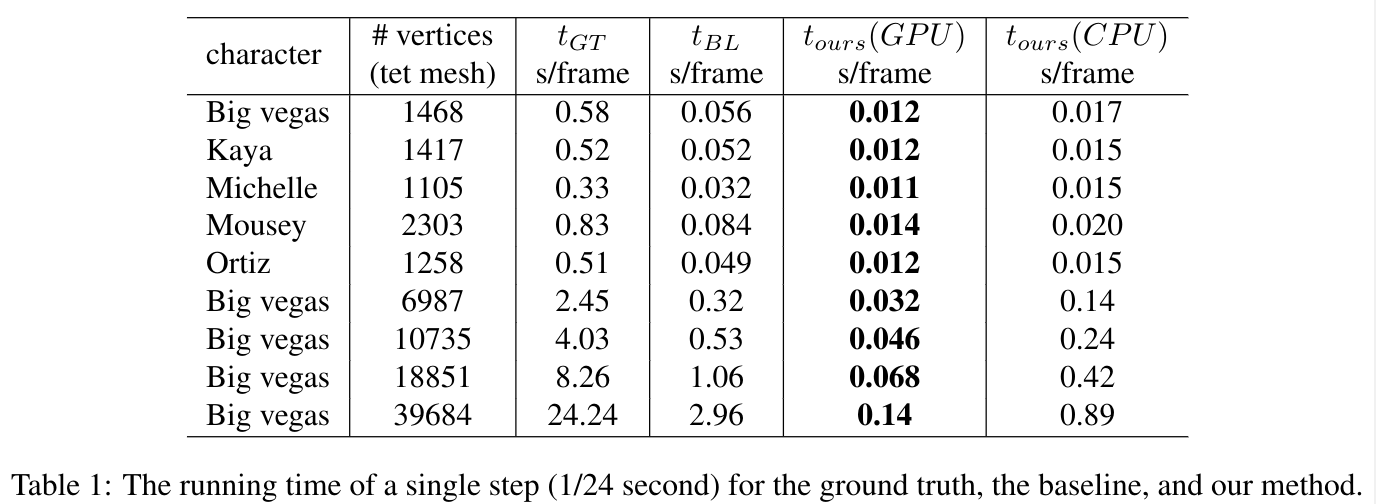

In Table 1, we report the per-frame running time t_ours of our method, together with that of the ground-truth method t_GT and a baseline method t_BL. We use the implicit backward Euler integrator as the ground truth and the faster explicit central-differences integrator as the baseline. For each method, we record only the time to compute the dynamic mesh, excluding other components such as initialization, rendering and mesh interpolation. Per frame, our method running on the GPU (CPU) is around 30 (20) times faster than the implicit integrator and around 3 (2) times faster than the explicit integrator. As the number of vertices increases, our method becomes even more competitive.
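The two integrators named above differ in how they advance the state, which is where their cost and stability trade-off comes from. The sketch below applies both to a single 1D spring (stiffness k, mass m) rather than to a character mesh, so it is only an illustration of the update rules. Backward Euler evaluates the force at the unknown next position, which for a mesh means solving a (sparse) linear system each step; here that system collapses to one division. Central differences needs no solve but is only stable for time steps h < 2/ω with ω = sqrt(k/m).

```python
import math


def accel(x, k=100.0, m=1.0):
    """Acceleration of a 1D linear spring anchored at the origin."""
    return -(k / m) * x


def central_differences(x0, v0, h, steps, k=100.0, m=1.0):
    """Explicit central differences: x_{n+1} = 2 x_n - x_{n-1} + h^2 a(x_n).
    Returns the position after `steps` steps (steps >= 1)."""
    x_prev = x0
    # Start-up step from a second-order Taylor expansion.
    x = x0 + h * v0 + 0.5 * h * h * accel(x0, k, m)
    for _ in range(steps - 1):
        x_prev, x = x, 2.0 * x - x_prev + h * h * accel(x, k, m)
    return x


def backward_euler(x0, v0, h, steps, k=100.0, m=1.0):
    """Implicit backward Euler: v_{n+1} = v_n + h a(x_{n+1}), x_{n+1} = x_n + h v_{n+1}.
    For a linear spring the implicit equation is solved in closed form;
    on a full mesh this becomes a linear solve per step."""
    x, v = x0, v0
    w2 = k / m
    for _ in range(steps):
        # Solve v_{n+1} = v_n - h*w2*(x_n + h*v_{n+1}) for v_{n+1}.
        v = (v - h * w2 * x) / (1.0 + h * h * w2)
        x = x + h * v
    return x
```

With k = 100 and m = 1 (ω = 10), a step of h = 0.25 exceeds the explicit stability limit of 0.2: central differences blows up, while backward Euler stays bounded (and damps the motion). With h = 0.01, central differences closely tracks the exact solution cos(10 t).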

4. Ablation study and comparisons

Big vegas (Figure 7) (vtx#: 1468; motion: hip hop dancing; frames: 283):

5. Limitations

As discussed in the Conclusion section, if the local geometric detail of a character differs significantly from that seen during training, the quality of our output decreases. For example, the main local structures in the ear regions of the Mousey character do not appear in the volumetric mesh of the sphere used for training. One potential avenue for addressing this is to design more general training primitives beyond the tetrahedralized sphere. A thorough study of the types of training primitives and motion sequences is an interesting direction for future work.

Mousey (vtx#: 2303; motion: swing dancing; frames: 627):

6. Easter egg

15 different animations of five different characters with predicted secondary motion:

Thanks! Stay healthy and happy.